Intro

The Technology Core at HMS DBMI is dedicated to easing the infrastructure burden placed on the department's informatics researchers, in addition to leading several projects of our own. To address that burden, we've been working with the faculty’s lead development staff to identify needs that generalize across projects. One big piece currently underway is a set of auth/authz/registration services that consolidate identity, make it easy to protect applications, and help us manage use and adoption of the platforms we create. Drawing on industry best practices, we also plan to establish a data lake to serve many of our data collection projects. As an academic institution we'd love to share our methods, so we'll try to blog about the work as we roll it out.

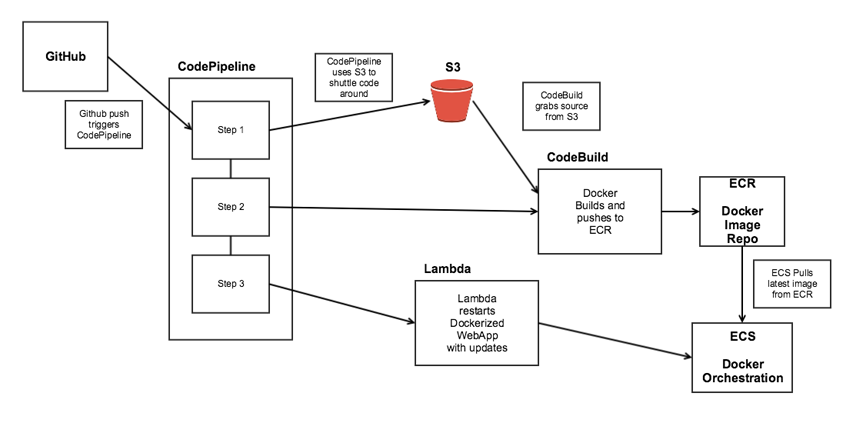

Today we’ll focus on some recent DevOps work: setting up automated deployments to the Amazon Web Services (AWS) EC2 Container Service (ECS) via CodePipeline and the services that go with it. The majority of our development work is in Python, and our examples reflect that.

For a variety of reasons that I believe are widely accepted, we are strict about scripting every change to our environments. The Python package boto3 is our workhorse when it comes to building AWS resources. We’ve built a set of scripts wrapping boto3 that are specific to our environments and end up being fairly straightforward to understand. At the end of the day, I think readability is what led us to this approach instead of CloudFormation, whose templates we would likely have been generating from Python anyway.

Overview

At a high level, the whole deployment process is controlled by CodePipeline. This service reacts when it detects a push to a GitHub repo and starts the process. CodePipeline's three steps then kick off to:

- Pull source code

- Build a docker image and push it to an image repo

- Use a Lambda to relaunch the Docker containers running the web servers

There are a few policies and roles that need to be created that allow AWS to utilize these functions. The sections below outline the role of the different steps in more detail and highlight some of the code required to create them.

CodeBuild

This AWS service allows you to specify a server configuration and build steps with AWS taking care of the rest. As with using any of the AWS services, there are a few roles/permissions you need to set up before you begin.

The following policy sets permissions that CodeBuild needs in order to:

- read from and write to S3 (CodePipeline pulls the code from GitHub and places it in S3 for CodeBuild to use)

- write logs to CloudWatch

- push our Docker image to ECR, because we are deploying to ECS

    codebuild_service_role_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Write build logs to CloudWatch.
                "Effect": "Allow",
                "Resource": [
                    "arn:aws:logs:us-east-1:" + settings["ACCOUNT_NUMBER"] + ":log-group:/aws/codebuild/" + project_name,
                    "arn:aws:logs:us-east-1:" + settings["ACCOUNT_NUMBER"] + ":log-group:/aws/codebuild/" + project_name + ":*"
                ],
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents"
                ]
            },
            {
                # Read and write artifacts in the CodePipeline S3 bucket.
                "Effect": "Allow",
                "Resource": [
                    "arn:aws:s3:::codepipeline-us-east-1-*"
                ],
                "Action": [
                    "s3:PutObject",
                    "s3:GetObject",
                    "s3:GetObjectVersion"
                ]
            },
            {
                # Push the Docker image to ECR.
                "Action": [
                    "ecr:BatchCheckLayerAvailability",
                    "ecr:CompleteLayerUpload",
                    "ecr:GetAuthorizationToken",
                    "ecr:InitiateLayerUpload",
                    "ecr:PutImage",
                    "ecr:UploadLayerPart"
                ],
                "Resource": "*",
                "Effect": "Allow"
            }
        ]
    }
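
IAM ultimately receives a policy document as a JSON string rather than a Python dict. As a rough sketch, the same document could be produced by a small function so that the account, region and project name are explicit inputs. The function name `build_codebuild_policy`, its parameters and the sample values are illustrative assumptions, not part of our scripts.

```python
import json


def build_codebuild_policy(account_id, project_name, region="us-east-1"):
    """Return the CodeBuild service policy as a plain dict (hypothetical helper)."""
    log_group = "arn:aws:logs:{}:{}:log-group:/aws/codebuild/{}".format(
        region, account_id, project_name)
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # CloudWatch Logs: the build's log group and its streams
                "Effect": "Allow",
                "Resource": [log_group, log_group + ":*"],
                "Action": ["logs:CreateLogGroup", "logs:CreateLogStream",
                           "logs:PutLogEvents"],
            },
            {   # S3: the artifact bucket CodePipeline reads from and writes to
                "Effect": "Allow",
                "Resource": ["arn:aws:s3:::codepipeline-" + region + "-*"],
                "Action": ["s3:PutObject", "s3:GetObject", "s3:GetObjectVersion"],
            },
            {   # ECR: authenticate and push image layers
                "Effect": "Allow",
                "Resource": "*",
                "Action": [
                    "ecr:BatchCheckLayerAvailability", "ecr:CompleteLayerUpload",
                    "ecr:GetAuthorizationToken", "ecr:InitiateLayerUpload",
                    "ecr:PutImage", "ecr:UploadLayerPart",
                ],
            },
        ],
    }


# Placeholder account number and project name, for illustration only.
policy = build_codebuild_policy("123456789012", "myproject")
policy_json = json.dumps(policy, indent=2)  # IAM expects the document as a JSON string
```

Keeping the document as a dict until the last moment makes it easy to inspect or adjust before it is serialized.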

Below we will create the policy and a role, then attach the policy to the newly created role. We use this style for many different services, so we inject the project name everywhere.

    from botocore.exceptions import ClientError

    # Create the policy; an existing policy with the same name is not an error.
    try:
        create_policy("CODEBUILD-" + project_name + "-SERVICE-POLICY", codebuild_service_role_policy,
                      "CODEBUILD-" + project_name + "-SERVICE-ROLE")
    except ClientError as e:
        if e.response['Error']['Code'] == 'EntityAlreadyExists':
            print("Object already exists")
        else:
            print("Unexpected error: %s" % e)

    # Create the role that CodeBuild will assume.
    try:
        create_role("CODEBUILD-" + project_name + "-SERVICE-ROLE", codebuild_trust_relationship)
    except ClientError as e:
        if e.response['Error']['Code'] == 'EntityAlreadyExists':
            print("Object already exists")
        else:
            print("Unexpected error: %s" % e)

    # Attach the policy to the role.
    add_policy_to_role("CODEBUILD-" + project_name + "-SERVICE-ROLE",
                       "arn:aws:iam::" + settings["ACCOUNT_NUMBER"] + ":policy/CODEBUILD-" + project_name + "-SERVICE-POLICY")
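
The helpers `create_policy`, `create_role` and `add_policy_to_role` come from our boto3 wrapper scripts and are not shown in this post. A minimal sketch of what such wrappers might look like follows. The signatures are assumptions and are simplified compared with the calls above (the IAM client is passed in explicitly so the functions can be exercised without AWS), and the contents given for `codebuild_trust_relationship` are the standard trust policy for the CodeBuild service, assumed rather than copied from our scripts.

```python
import json

# Assumed contents: lets the CodeBuild service assume the role.
codebuild_trust_relationship = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "codebuild.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def create_policy(iam, policy_name, policy_document):
    """Create a managed policy and return its ARN (assumed signature)."""
    response = iam.create_policy(
        PolicyName=policy_name,
        PolicyDocument=json.dumps(policy_document),
    )
    return response["Policy"]["Arn"]


def create_role(iam, role_name, trust_relationship):
    """Create a role that the service named in the trust policy may assume."""
    iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(trust_relationship),
    )


def add_policy_to_role(iam, role_name, policy_arn):
    """Attach an existing managed policy to a role."""
    iam.attach_role_policy(RoleName=role_name, PolicyArn=policy_arn)
```

With real credentials the client would be `boto3.client("iam")`; passing it as an argument is only a convenience for testing.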

Creating the actual build project is straightforward: we specify the environment that AWS should spin up when it's time to do the build. We ask for a Linux Docker environment and pass in a set of environment variables, which the build process uses to tag our image properly and to know where to push it.

    try:
        codebuild_client.create_project(
            name=project_name,
            source={"type": "CODEPIPELINE"},
            artifacts={"type": "CODEPIPELINE"},
            environment={
                "type": "LINUX_CONTAINER",
                "image": "aws/codebuild/docker:1.12.1",
                "computeType": "BUILD_GENERAL1_MEDIUM",
                "environmentVariables": [
                    {"name": "AWS_DEFAULT_REGION", "value": "us-east-1"},
                    {"name": "AWS_ACCOUNT_ID", "value": settings["ACCOUNT_NUMBER"]},
                    {"name": "IMAGE_REPO_NAME", "value": task_name},
                    {"name": "IMAGE_TAG", "value": image_tag}
                ]
            },
            serviceRole="CODEBUILD-" + project_name + "-SERVICE-ROLE")
    except ClientError as e:
        if e.response['Error']['Code'] == 'ResourceAlreadyExistsException':
            print("Duplication of object: %s" % e)
        else:
            print("Error: %s" % e)
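
Inside the build, the four environment variables are typically combined into the full ECR image name used when tagging and pushing the image. The sketch below shows that composition in Python purely for illustration (in practice it happens in the shell commands of the build spec). The function name and the sample values are assumptions.

```python
import os


def ecr_image_uri(env=None):
    """Compose <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag> from build variables."""
    env = os.environ if env is None else env
    registry = "{}.dkr.ecr.{}.amazonaws.com".format(
        env["AWS_ACCOUNT_ID"], env["AWS_DEFAULT_REGION"])
    return "{}/{}:{}".format(registry, env["IMAGE_REPO_NAME"], env["IMAGE_TAG"])


# Sample values standing in for what CodeBuild would inject.
example_env = {
    "AWS_DEFAULT_REGION": "us-east-1",
    "AWS_ACCOUNT_ID": "123456789012",
    "IMAGE_REPO_NAME": "sciauth",
    "IMAGE_TAG": "latest",
}
print(ecr_image_uri(example_env))
# prints: 123456789012.dkr.ecr.us-east-1.amazonaws.com/sciauth:latest
```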

The source code gets passed to CodeBuild through the magic of CodePipeline when we put it all together. The actual build instructions can be included in a few different ways; we simply add a buildspec.yml file to our projects. An example of the file can be found here: https://github.com/hms-dbmi/SciAuth/blob/master/buildspec.yml

Lambda-ECS Interaction

Another resource we need to create is a Lambda function that handles a few of the deployment steps related to ECS. Using boto3 within the Lambda, we create a new task definition and then force an ECS service update. The outline of the code that lives inside the Lambda is below. It's important to report the job back to CodePipeline as a success or a failure so that CodePipeline knows the action has finished and does not keep waiting on it. The parameters we need to register our task and update the service are passed into the Lambda by CodePipeline as a chunk of JSON, which we parse accordingly.

    import boto3
    import json

    ecs_client = boto3.client('ecs')
    codepipeline_client = boto3.client('codepipeline')


    def lambda_handler(event, context):
        # CodePipeline hands the user parameters over as a JSON string.
        event_from_pipeline = event["CodePipeline.job"]["data"]["actionConfiguration"]["configuration"]["UserParameters"]
        decoded_params = json.loads(event_from_pipeline)

        try:
            environment = decoded_params["ENVIRONMENT"]
            taskname = decoded_params["TASKNAME"]
            ...
            ports = decoded_params["PORTS"]
        except KeyError:
            # Report the failure and stop; nothing below can run without the parameters.
            codepipeline_client.put_job_failure_result(
                jobId=event["CodePipeline.job"]["id"],
                failureDetails={"type": "ConfigurationError", "message": "Missing Parameters!"}
            )
            return

        try:
            if include_cloudwatch == "True":
                container_definition[0]["logConfiguration"] = {
                    "logDriver": "awslogs",
                    "options": {
                        "awslogs-group": cloudwatch_group + environment.lower(),
                        "awslogs-region": "us-east-1",
                        "awslogs-stream-prefix": cloudwatch_prefix
                    }
                }
        except KeyError:
            pass

        print("Register task definition.")
        ecs_client.register_task_definition(family=task_family + "-" + taskname,
                                            taskRoleArn=task_role,
                                            containerDefinitions=container_definition)

        print("Update Service (" + cluster_name + "-" + taskname + ").")
        ecs_client.update_service(cluster=cluster_name,
                                  service=cluster_name + "-" + taskname,
                                  taskDefinition=cluster_name + "-" + taskname)

        # Tell CodePipeline the job succeeded so the pipeline can finish.
        codepipeline_client.put_job_success_result(
            jobId=event["CodePipeline.job"]["id"]
        )

        return {
            'message': "Success! Task Updated (" + cluster_name + "-" + taskname + ") in service " + cluster_name + "."
        }
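
One detail worth spelling out is that the handler should stop as soon as it has reported a failure. The sketch below isolates the parameter handling and passes the CodePipeline client in as an argument so the logic can be tried locally. `REQUIRED_KEYS`, `read_user_parameters` and `handle_job` are hypothetical names, and the three keys shown are only an illustrative subset of the real parameter list.

```python
import json

REQUIRED_KEYS = ("ENVIRONMENT", "TASKNAME", "PORTS")  # illustrative subset


def read_user_parameters(event):
    """Decode the JSON string CodePipeline passes as UserParameters."""
    job = event["CodePipeline.job"]
    raw = job["data"]["actionConfiguration"]["configuration"]["UserParameters"]
    params = json.loads(raw)
    missing = [key for key in REQUIRED_KEYS if key not in params]
    return params, missing


def handle_job(event, codepipeline_client):
    """Report failure and return early when parameters are missing."""
    job_id = event["CodePipeline.job"]["id"]
    params, missing = read_user_parameters(event)
    if missing:
        codepipeline_client.put_job_failure_result(
            jobId=job_id,
            failureDetails={
                "type": "ConfigurationError",
                "message": "Missing parameters: " + ", ".join(missing),
            },
        )
        return None
    # ... register the task definition and update the service here ...
    codepipeline_client.put_job_success_result(jobId=job_id)
    return params
```

Exactly one of the two result calls is made per job, which is what CodePipeline expects from an invoked Lambda.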

CodePipeline

CodePipeline is an orchestration service with hooks for the tools in the deployment process (like GitHub, CodeBuild, Lambda). The code below creates the CodePipeline resources with steps for pulling, building and deploying our applications.

The first step in CodePipeline is to pull code from GitHub. This is accomplished by passing in the name of the repository/branch/organization and an OAuth token of a privileged user.

    ....
    "actions": [
        {
            "runOrder": 1,
            "actionTypeId": {
                "category": "Source",
                "provider": "GitHub",
                "version": "1",
                "owner": "ThirdParty"
            },
            "name": "Source",
            "outputArtifacts": [
                {
                    "name": artifact_name_to_pass
                }
            ],
            "configuration": {
                "Branch": "development",
                "OAuthToken": settings["GITHUB_OAUTH_TOKEN"],
                "Owner": "hms-dbmi",
                "Repo": settings["REPO_NAME_" + task_name]
            },
            "inputArtifacts": []
        }
    ]
    ....

The second step is to use a CodeBuild project to create the Docker image from the previously pulled code. You'll need to have created the CodeBuild project beforehand so that CodePipeline can properly link it.

    ...
    "actions": [
        {
            "runOrder": 1,
            "actionTypeId": {
                "category": "Build",
                "provider": "CodeBuild",
                "version": "1",
                "owner": "AWS"
            },
            "name": "CodeBuild",
            "outputArtifacts": [
                {
                    "name": "AppName"
                }
            ],
            "configuration": {
                "ProjectName": stack_name + "-" + task_name
            },
            "inputArtifacts": [
                {
                    "name": artifact_name_to_pass
                }
            ]
        }
    ],
    "name": "Build"
    ...
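
The link between the Source and Build stages is the artifact name: whatever the Source action lists under `outputArtifacts` must appear under the Build action's `inputArtifacts`. A small, hypothetical check like the one below (not part of our scripts) could catch a mismatch before the pipeline is created. It is a deliberate simplification that only treats outputs as available to later stages, the stage dictionaries are trimmed to the fields the check needs, and `"SourceOutput"` is a made-up artifact name.

```python
def find_unknown_inputs(stages):
    """Return (action name, artifact name) pairs whose input was not produced by an earlier stage."""
    produced = set()
    problems = []
    for stage in stages:
        for action in stage["actions"]:
            for artifact in action.get("inputArtifacts", []):
                if artifact["name"] not in produced:
                    problems.append((action["name"], artifact["name"]))
        # Record this stage's outputs only after checking its inputs.
        for action in stage["actions"]:
            for artifact in action.get("outputArtifacts", []):
                produced.add(artifact["name"])
    return problems


artifact_name_to_pass = "SourceOutput"  # placeholder value
stages = [
    {"name": "Source", "actions": [
        {"name": "Source", "inputArtifacts": [],
         "outputArtifacts": [{"name": artifact_name_to_pass}]}]},
    {"name": "Build", "actions": [
        {"name": "CodeBuild", "inputArtifacts": [{"name": artifact_name_to_pass}],
         "outputArtifacts": [{"name": "AppName"}]}]},
]
print(find_unknown_inputs(stages))  # prints: []
```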

The third step is the deployment. Here we call a Lambda function which uses some methods built into ECS to trigger the tasks to refresh with the latest version in ECR.

    {
        "name": "Deploy",
        "actions": [
            {
                "outputArtifacts": [],
                "inputArtifacts": [],
                "runOrder": 1,
                "actionTypeId": {
                    "version": "1",
                    "category": "Invoke",
                    "provider": "Lambda",
                    "owner": "AWS"
                },
                "name": "Deploy_Lambda",
                "configuration": {
                    "FunctionName": "ecs_update",
                    "UserParameters": json.dumps(update_ecs_lambda_parameters)
                }
            }
        ]
    }
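
For completeness, here is a rough sketch of how stage dictionaries like the ones above might be wrapped into the structure that boto3's `create_pipeline` expects. The pipeline name, role ARN, bucket name and parameter values are placeholders, and `build_pipeline_definition` is a hypothetical helper. The sketch also shows the `UserParameters` round trip, since the Lambda receives exactly the string produced by `json.dumps`.

```python
import json


def build_pipeline_definition(name, role_arn, artifact_bucket, stages):
    """Wrap stage definitions in the top-level pipeline structure."""
    return {
        "name": name,
        "roleArn": role_arn,
        "artifactStore": {"type": "S3", "location": artifact_bucket},
        "stages": stages,
    }


# Placeholder parameters; the real set is whatever the Lambda reads.
update_ecs_lambda_parameters = {"ENVIRONMENT": "DEV", "TASKNAME": "sciauth"}

deploy_stage = {
    "name": "Deploy",
    "actions": [{
        "name": "Deploy_Lambda",
        "runOrder": 1,
        "actionTypeId": {"category": "Invoke", "owner": "AWS",
                         "provider": "Lambda", "version": "1"},
        "inputArtifacts": [],
        "outputArtifacts": [],
        "configuration": {
            "FunctionName": "ecs_update",
            "UserParameters": json.dumps(update_ecs_lambda_parameters),
        },
    }],
}

pipeline = build_pipeline_definition(
    "example-pipeline",
    "arn:aws:iam::123456789012:role/example-codepipeline-role",
    "codepipeline-us-east-1-example",
    [deploy_stage],  # a real pipeline would list the Source and Build stages first
)

# With real credentials this would be submitted via:
#   boto3.client("codepipeline").create_pipeline(pipeline=pipeline)
```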

Conclusion

And that's more or less the gist of it! CodePipeline handles artifact routing and job status tracking. CodeBuild has a variety of options you can play with, and the ability to use YAML files opens up more possibilities. In a future post we will detail how we use the ECS services to host our Dockerized environments.